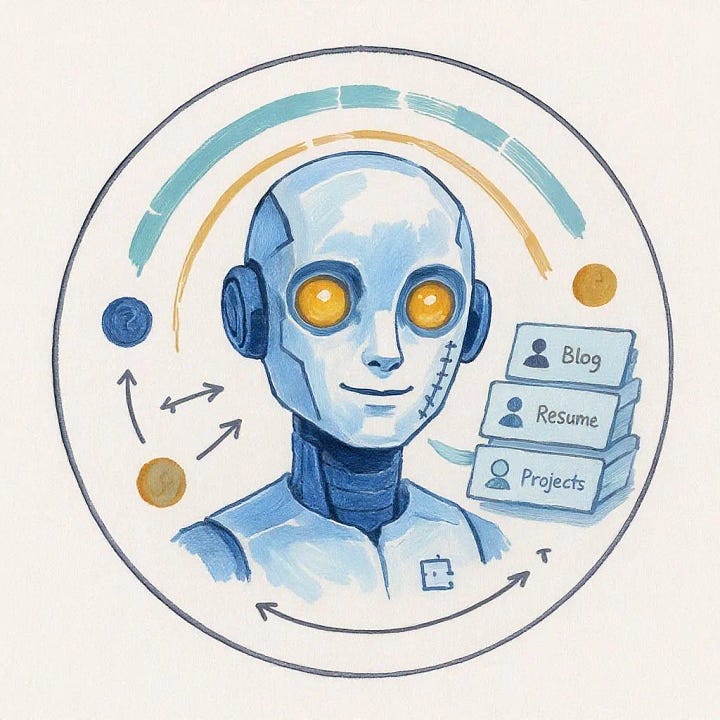

The Making of bot-drei 😎

Fine-tuning an LLM and building a custom RAG pipeline

Intro

Hi everyone,

Hope you are all doing well! I have been well: I have more time for running again, the weather is beautiful, and I have been reading plenty. Here’s what I’ve been up to:

I’ve also had time to continue learning through fun projects, and I have one to share with you all today! I made my very own bot… meet bot-drei!

Why?

I wanted to learn about model fine-tuning and RAG by doing. Building an AI version of myself seemed like a great place to start: personal enough to notice what worked and what didn’t, and I figured I had enough training data. I wanted to make bot-drei!1

Going in, I assumed that fine-tuning would be the hard part, and require loads of data. RAG across my blog posts and website? Should be simple enough - that sounds like a product problem!

Turns out the hard part wasn’t where I expected.

The question that emerged: how do you balance personality with accuracy when your knowledge base is actually pretty small? Give the model too much freedom and it hallucinates.2 Lock it down too hard and it becomes a glorified search engine with no personality. I wanted to find a middle path.

Fine-Tuning (making it sound like me)

This turned out to be straightforward. 10 minutes to train a small open-source model on 100-200 examples of my writing using LoRA on my Windows desktop with an old Nvidia GPU.3 Training, merging weights, changing model formats, all done flawlessly thanks to Unsloth.

LoRA (Low-Rank Adaptation) works by freezing almost all of a model’s existing weights and training only a few small “adapter” matrices that sit inside its attention layers. Instead of updating billions of parameters, it learns a low-rank approximation, basically a compact patch that nudges how the model represents relationships between words. That’s why you can fine-tune a 4B-parameter model4 so quickly: you’re only training a few million parameters while keeping everything else intact. When you merge the LoRA into the base model later, it behaves as if it had been fine-tuned. Kind of like tailoring a piece of clothing.

The model picked up my patterns, email and text style, and how I structure ideas. That was the easy part.

The Routing Problem

Once I had a model that sounded like me, I needed it to answer questions about my actual work without making things up. The system routes queries based on how confident it is about retrieved context:

Pre-filtering catches greetings and short queries - skip RAG, respond conversationally with personality.

Full pipeline handles substantive questions through query expansion, intent detection, hybrid retrieval, and confidence-based response generation.

Three confidence bands determine response style:

High (≥0.6): authoritative synthesis

Medium (0.4-0.6): quote and contextualize with explicit limitations

Low (<0.4): conversational fallback, honest about what the system can’t answer

Building the RAG System

This took many iterations across a few days. Getting the balance right was difficult, especially since measurement isn’t straightforward.

I also learned that my knowledge base is small: 10 blog posts, resume, LinkedIn profile, website content. This constraint created a core driving tension behind this system: let the fine-tuned model’s personality run free and it fills gaps with hallucination, or constrain it too hard to retrieval and you get a search engine that responds to your name…

How queries get processed

Query expansion adds synonyms and related terms. Someone asks about “projects” and the system searches for “work,” “portfolio,” “experience” too.

Intent detection routes questions to the right content types, like resume entries, blog posts, project descriptions. Metadata filtering (tags) prevents weird cross-contamination (so resume queries don’t surface personal blog posts).

Hybrid retrieval (the interesting part)

I use two complementary methods because each catches what the other misses:

Dense embeddings (70% weight) handle semantic similarity. When someone asks “what has Andrei built?”, embeddings find content about projects and experience even if those exact words don’t appear. It understands meaning through vector distance (cosine similarity).

Sparse BM25 (30% weight) catches exact keyword matches. If someone asks about “Sfânt” or “fine-tuning”, BM25 ensures content with those specific terms ranks highly. Pure semantic search sometimes misses exact technical terms or proper nouns.

The system combines both result sets with weighted scores, applies time decay5 to favor recent content, then reranks based on intent match. Retrieves up to 50 candidates, narrows to the 3 most relevant.

Confidence

The confidence score drives how the model responds:

High confidence (≥0.6): Temperature drops to 0.3, responses up to 1500 tokens. Authoritative synthesis in my voice, direct information delivery.

Medium confidence (0.4-0.6): Same parameters but explicitly acknowledge limitations. Quote relevant passages and contextualize them.

Low confidence (<0.4): Temperature back to 0.6, 500 tokens max. Skip RAG context injection entirely, respond conversationally, be honest about limitations. This happens a lot when people ask about things completely outside my content.

Chunking Strategy (surprisingly important)

Chunking determines how much context the model sees for each retrieval. Too small and you lose the thread of an argument. Too large and the embedding becomes generic, retrieval accuracy drops, and you waste tokens on irrelevant text.

Different content needs different treatment:

Resume entries: no chunking. Job descriptions are already bulleted and summarized, they need full context.

Projects and experience: 1000-token chunks. Structured portfolio data loses meaning when fragmented.

Blog posts: 500 tokens with 100-token overlap, preserving heading boundaries so I don’t split mid-thought.

Deployment (pretty annoying)

I wanted to host the GGUF model somewhere with OpenAI-compatible API endpoints without spending hundreds of dollars. I tested locally with LM Studio and liked that pattern - no need to convert model formats.

I tried RunPod first. Unfortunately, their sign-up system bugged out processing my $150 minimum (!) deposit multiple times. Even when I got past that, I couldn’t actually deploy: they had no inventory of any GPUs under a few dollars per hour, and even those wouldn’t spin up correctly. After a day of troubleshooting, I gave up.

Hugging Face Inference Endpoints turned out to be significantly easier. It is more expensive per hour than RunPod’s cheapest plan would have been, but the simplicity trade-off was worth it. After uploading my model, I can start/stop the endpoint on command through their UI, which keeps costs manageable.

What I learned

Fine-tuning is straightforward now. Even with minimal background in the underlying math, the tooling works and guides are plentiful. With a decent GPU (or Google Colab credits), you can adapt a model to your voice in minutes. It took longer to format my training data than actually train.

Creating a useful RAG pipeline is harder than I thought! You’re constantly fighting the model’s prior - it wants to complete patterns from training on billions of tokens. Getting it to stick to a few pages of writing and a resume without losing the model’s voice entirely is tough.

I think the answer isn’t choosing personality or accuracy. It’s smart, contextual routing: personality for greetings, precision for facts, honesty when confidence is low.

And finally, the result: personality when wanted, precision (with clickable sources!) when required, honesty when neither fits. The model sounds like me and (mostly) tells the truth about me!

Try it on my site at andrei.bio/chat.

Other considered names: NPCosmin, AndreiGPT, AndreiBot

A Qwen model I fine tuned really thought I was Russian and studied all sorts of fancy things in Moscow!

I did run out of credits on Google Colab pretty quickly, I didn’t successfully get a model out from there since setting up the environment wasted a lot of my GPU runtime.

I tuned both Phi3 and Qwen2-3b, using Qwen in production

(α = 0.001/day, floor 0.1)

Love this perspective! That bit about balancing personality with accuracy when the knowledge base is small realy hit home. It's such a core challenge in building useful bots.